Lift and Shift COTS to the AWS Public Cloud

The Scenario

As organisations look to detach themselves from their costly on-premise data centres, focus turns to not just moving applications, but also improving the software lifecycle management, security, reliability, availability and recovery of those applications in more agile and automated ways, while reducing the risk and cost of these activities.

In this example, Modis took a Web Identity Management system comprising multiple COTS components, and applied best practice to a Cloud deployment. This system front-ends the authentication and authorisation for multiple public-facing services, each of which has a large registered user base (>50,000).

The COTS components included:

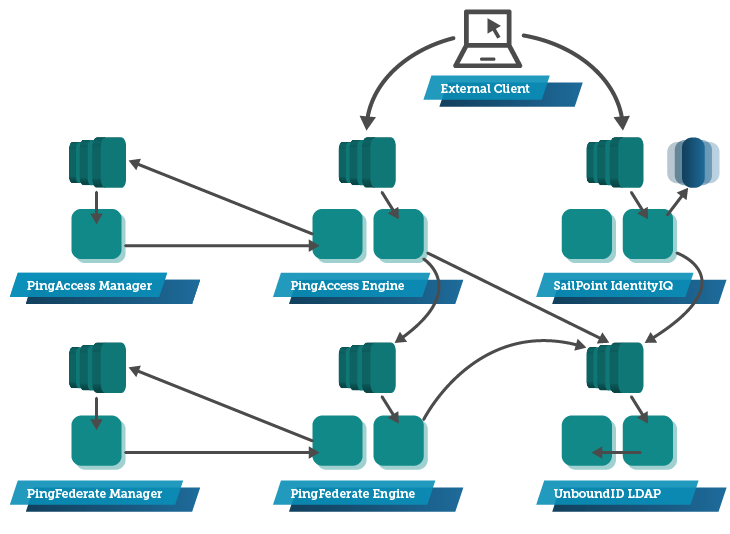

- PingAccess Engine, a reverse proxy identity management service, and PingAccess Manager for managing a PingAccess cluster.

- PingFederate Engine, an authentication engine, and PingFederate Manager for managing a PingFederate cluster

- SailPoint, a user management interface

- UnboundID, a clustering (and replicating) LDAP server

The Solution

The following AWS Services were used in this delivery:

- CloudFormation, to template the creation of all resources, with parameters to reference:

  - versions of software

  - names of S3 Buckets for software repositories

  - subnet IDs to use within a VPC

- AutoScale to create EC2 instances, either as a single instance (min size = 1, max size = 1) for management nodes (“There can be only one”), or as a larger fleet for Engine nodes

- IAM Instance Profiles, IAM Roles and Policies for providing auto-rotating credentials and service-specific access permissions

- Elastic Load Balancing, providing a reliable front-end interface for traffic, including the termination of HTTPS traffic on the ELB tier permitting the use of…

- AWS Certificate Manager (ACM), automatically creating valid X.509 SSL certificates that are renewed automatically before expiry

- CloudWatch Logs, retaining logs from the various applications on the instances
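To make the parameterised template idea concrete, the following is a minimal sketch in Python that builds a CloudFormation template as a dictionary and prints it as JSON. It is not the template used in this delivery: the parameter names, the `ManagerGroup` logical ID and the use of a launch template are assumptions made for illustration.

```python
import json


def build_template():
    """Return a CloudFormation template (as a dict) for a single-instance group.

    All parameter names and logical IDs are hypothetical.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Sketch: parameterised single-instance AutoScale group",
        "Parameters": {
            # Software version and bucket name would typically be consumed by
            # the instance setup scripts (not shown in this sketch).
            "SoftwareVersion": {"Type": "String"},
            "SoftwareBucketName": {"Type": "String"},
            # Subnets within the VPC that the group may launch into.
            "SubnetIds": {"Type": "List<AWS::EC2::Subnet::Id>"},
            # Assumption: instances are described by an existing launch template.
            "LaunchTemplateId": {"Type": "String"},
            "LaunchTemplateVersion": {"Type": "String"},
        },
        "Resources": {
            "ManagerGroup": {
                "Type": "AWS::AutoScaling::AutoScalingGroup",
                "Properties": {
                    # min = max = 1: exactly one management node at a time,
                    # replaced automatically if it fails.
                    "MinSize": "1",
                    "MaxSize": "1",
                    "VPCZoneIdentifier": {"Ref": "SubnetIds"},
                    "LaunchTemplate": {
                        "LaunchTemplateId": {"Ref": "LaunchTemplateId"},
                        "Version": {"Ref": "LaunchTemplateVersion"},
                    },
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Raising `MinSize` and `MaxSize` in the same structure would describe a fleet rather than a single node.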

Figure 1 – The complete deployment

The UnboundID LDAP Cluster

This component lies at the back end of the solution and is the first component deployed: it is an LDAP directory store that deploys (replicates) across multiple hosts.

A CloudFormation template creates an AutoScale Group, an internal-facing Elastic Load Balancer, and a Route53 DNS record associating a DNS name to the load balancer.

The Security Group for the EC2 instances permits traffic only from the Elastic Load Balancer, while the Security Group for the Elastic Load Balancer itself permits traffic from the internal clients on port 636 (LDAPS) only.
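The two-tier rule set described above might be expressed as CloudFormation resources along the following lines. This is a sketch under stated assumptions, written as a Python function that returns the resource definitions: the logical IDs, the `client_cidr` argument and the choice of port 636 on the instances are illustrative and are not taken from the actual template.

```python
LDAPS_PORT = 636


def ldap_security_groups(vpc_id, client_cidr, instance_port=LDAPS_PORT):
    """Return two security group resources: one for the ELB, one for instances.

    instance_port is an assumption; the port the instances listen on is not
    stated in this article.
    """
    return {
        "LoadBalancerSecurityGroup": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": "LDAPS from internal clients to the ELB",
                "VpcId": vpc_id,
                "SecurityGroupIngress": [
                    {
                        "IpProtocol": "tcp",
                        "FromPort": LDAPS_PORT,
                        "ToPort": LDAPS_PORT,
                        "CidrIp": client_cidr,
                    }
                ],
            },
        },
        "InstanceSecurityGroup": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": "Traffic from the LDAP ELB only",
                "VpcId": vpc_id,
                "SecurityGroupIngress": [
                    {
                        "IpProtocol": "tcp",
                        "FromPort": instance_port,
                        "ToPort": instance_port,
                        # The source is the ELB's security group, not a CIDR
                        # range, so only the load balancer can reach instances.
                        "SourceSecurityGroupId": {
                            "Fn::GetAtt": ["LoadBalancerSecurityGroup", "GroupId"]
                        },
                    }
                ],
            },
        },
    }
```

Referencing the load balancer's security group as the source, rather than an address range, keeps the instance rule correct even when the load balancer's addresses change.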

The AutoScale Group launches EC2 Instances into IAM Roles, which provide rotating credentials and policy permissions for the EC2 Instances to access S3, from where software and configuration scripts are fetched. VPC configuration (done separately by an earlier template) facilitates this by way of an S3 Endpoint.

The EC2 Instance setup uses the AWS CLI to consult the EC2 API and find its peers by searching Instance tags. It then connects directly to a peer and replicates the data set to become a fully operational LDAP member server. If no peers are found, the Instance is instructed to look in a specific S3 Bucket prefix for a configuration and backup data set to load, thereby (re-)establishing a cluster.
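The discovery step can be sketched as follows. This is a minimal illustration in Python rather than the deployment's actual setup script: it assumes the JSON output of `aws ec2 describe-instances` (already filtered by a hypothetical cluster tag) has been parsed into a dictionary, and the function names, action labels and backup prefix are invented for the example.

```python
def find_peer_addresses(describe_instances_response, own_instance_id):
    """Return private IPs of running instances other than this one."""
    peers = []
    for reservation in describe_instances_response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            if instance.get("InstanceId") == own_instance_id:
                continue  # never treat ourselves as a peer
            if instance.get("State", {}).get("Name") != "running":
                continue  # skip pending, stopping or terminated instances
            address = instance.get("PrivateIpAddress")
            if address:
                peers.append(address)
    return sorted(peers)


def choose_startup_action(peers, backup_prefix):
    """Join an existing cluster if a peer exists, else restore from S3."""
    if peers:
        return ("replicate_from_peer", peers[0])
    return ("restore_from_backup", backup_prefix)


if __name__ == "__main__":
    # Hand-written sample in the shape returned by describe-instances.
    response = {
        "Reservations": [
            {
                "Instances": [
                    {"InstanceId": "i-self", "PrivateIpAddress": "10.0.0.1",
                     "State": {"Name": "running"}},
                    {"InstanceId": "i-peer", "PrivateIpAddress": "10.0.0.2",
                     "State": {"Name": "running"}},
                ]
            }
        ]
    }
    peers = find_peer_addresses(response, "i-self")
    print(choose_startup_action(peers, "backups/ldap/"))
```

Excluding the instance's own ID matters because a freshly launched instance carries the same tags as its peers and would otherwise try to replicate from itself.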

After joining or establishing a cluster, the Instance then periodically creates a fresh backup to S3, so the cluster can be recovered in future if needed.

The launched EC2 Instances submit logs to a CloudWatch Logs Log Group. The Log Group is defined by the CloudFormation template with a set retention period.

Route53 is updated to set an ALIAS record for the LDAP ELB as the entry point for dependent components (the rest of the solution).

The Elastic Load Balancer is configured to use a specific Certificate issued by AWS Certificate Manager, matching the DNS name generated into Route53. This ensures the certificate is always valid without requiring staff to maintain it, and that clients can trust the endpoint. The Load Balancer is also configured with specific SSL Policies (Protocols, Ciphers), and performs access logging to an S3 Bucket.

The UnboundID CloudFormation template was designed to allow multiple LDAP installations within the same VPC and domain. This was necessary because older applications still required access to historical LDAP user stores. The older LDAP directories were migrated to UnboundID, with the schema and LDIF files stored separately within the S3 Bucket.

SailPoint Identity IQ User Management Service

This web application lets administrative users manage user attributes and permissions across the range of relying applications. It consists of a relational database, and a fleet of application servers behind a load balancer launched from another CloudFormation template.

Once again, the security group for the EC2 instances permits traffic only from the Load Balancer, and the security group for the Elastic Load Balancer permits only HTTPS access.

The AutoScale group launches EC2 Instances into IAM Roles for the SailPoint instances, similar to the LDAP servers (above) permitting access to S3 to fetch software and setup scripts. The setup scripts configure the connection strings for the EC2 Instance to connect to an RDS database.

Again, the launched EC2 instances submit logs to a CloudWatch Logs Log Group with a set retention period.

A public-facing ELB is configured to use a specific Certificate from AWS Certificate Manager, with specific SSL Policies (Protocols, Ciphers) and access logging to S3.

Again, Route53 is updated to set an ALIAS record for the SailPoint ELB.

The SailPoint application uses Amazon Simple Email Service to send emails related to user management functions. These include password resets, Access Role approval requests and confirmation of Access Role changes.

PingFederate – Engine and Manager

The architecture of PingFederate is to have a fleet of “Engine” hosts that handle customer requests, and a single “Management” host that purely assists with clustering and configuration of the Engine hosts.

The Manager node is a simple AutoScale group with minimum size 1 and maximum size 1, and an ELB. The Instance(s) again have an IAM Role, fetch software and scripts from S3 on boot, and submit logs to CloudWatch Logs. The internal-facing ELB has SSL certificates from ACM, strict SSL Policies, and ELB Access Logging to S3. The Manager node creates a cluster configuration file that is loaded automatically from a defined S3 Bucket. The S3 Bucket has a Bucket Policy that protects the file and allows access only to the Manager and Engine nodes.

The Engine nodes similarly start up in an ASG (with larger minimum and maximum fleet sizes), and read the cluster configuration file from the S3 Bucket. This file contains the details a node needs to join the cluster. Each Engine node updates the cluster file with its own details, so that the other Engine nodes and the Manager node can register its existence and find their peers.
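The read, update and write-back cycle on the shared cluster file can be illustrated with a short Python sketch. The real file format used by the deployment is not described here, so the JSON layout, the `nodes` key and both function names are hypothetical. Fetching the file from S3 and writing it back are left out, and the functions operate on the file's text.

```python
import json


def register_node(cluster_file_text, node_id, address):
    """Return updated cluster file text with this node's details added.

    Assumes a hypothetical JSON layout: {"nodes": {node_id: {"address": ...}}}.
    An empty file is treated as a cluster with no registered nodes.
    """
    config = json.loads(cluster_file_text) if cluster_file_text.strip() else {}
    nodes = config.setdefault("nodes", {})
    nodes[node_id] = {"address": address}  # re-registering simply overwrites
    return json.dumps(config, indent=2, sort_keys=True)


def peers_of(cluster_file_text, node_id):
    """Return the addresses of every registered node except node_id."""
    config = json.loads(cluster_file_text)
    return sorted(
        entry["address"]
        for name, entry in config.get("nodes", {}).items()
        if name != node_id
    )


if __name__ == "__main__":
    text = register_node("", "engine-1", "10.0.1.10")
    text = register_node(text, "engine-2", "10.0.1.11")
    print(peers_of(text, "engine-1"))
```

A read-modify-write cycle of this kind is not atomic on its own: two nodes starting at the same moment could overwrite each other's entry, so a real implementation would need some way to detect or retry lost updates.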

Once the new Engine nodes have registered their existence, the Manager node monitors them and updates them directly on the internal address space (not via the ELB).

Part of the configuration of the Manager is to tell it where its SailPoint Identity IQ service is (hostname, port, protocol), and where its back-end LDAP store is (LDAPS).

PingAccess – Engine and Manager

Similar to the PingFederate deployment, the PingAccess reverse proxy consists of a Manager host and a fleet of Engine hosts. The Engine nodes are behind a public-facing ELB, with SSL terminating on the ELB using a certificate issued by AWS Certificate Manager.

As above, the Manager node is defined first, with a Route53 DNS name for the ELB in front of the Manager host.

As the Engine nodes start, they register against the Manager node using an API call to the DNS address of the Manager Node (on the ELB). The API call allows them to download a configuration file that sets up the node to join the cluster, after which they start processing the external traffic sent to them.

Scale Out and Fault Tolerance

The architecture is spread across three Availability Zones (AZs). As AutoScale spawns instances – during a rolling update (replace) across the fleets or after an instance failure – it tries to keep an even distribution of resources across AZs. Should an entire AZ fail, the two remaining AZs can be used to satisfy replacement capacity.
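The arithmetic behind an even spread, and the effect of losing one AZ, can be shown with a small Python model. This is a simplified illustration only, not the balancing logic AutoScale itself uses, and the zone names and fleet size are made up.

```python
def spread_across_zones(instance_count, zones):
    """Distribute instances as evenly as possible across the given zones."""
    if not zones:
        raise ValueError("at least one zone is required")
    base, extra = divmod(instance_count, len(zones))
    # The first `extra` zones each take one additional instance.
    return {
        zone: base + (1 if index < extra else 0)
        for index, zone in enumerate(zones)
    }


if __name__ == "__main__":
    # Hypothetical fleet of six Engine nodes.
    print(spread_across_zones(6, ["az-a", "az-b", "az-c"]))
    # The same fleet after az-c becomes unavailable.
    print(spread_across_zones(6, ["az-a", "az-b"]))
```

With six instances, three zones carry two each. If one zone is lost, the remaining two must each carry three, so subnet and capacity planning needs to allow for that extra load per zone.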

The Outcomes and Benefits

The application deployment can be managed from CloudFormation, making it repeatable in non-production and production environments and reducing risk of deployment. The use of AutoScale and ELB makes this a robust and self-healing deployment, while the use of VPC, EC2 Security Groups, and ACM makes this a trusted and secure deployment. This has been achieved without modification of the COTS components themselves.

NB: Not all security considerations are disclosed here, for operational reasons. Details such as protocols, ciphers, algorithms, and services used are subject to change, based upon changing threats over time, and innovations in architecture and services.